Bandwidth Delay Product and TCP Tuning

There are many factors that limit the available bandwidth of a network link from point A to point B. Knowing the theoretical maximum bandwidth, and expecting something reasonably close to it, is one thing. However, the latency of the link can drastically reduce the throughput you actually get. The quantity that ties the two together is called the Bandwidth Delay Product. It can be thought of as the “memory” of the link: the amount of data that is in flight at any moment (in practice, that data has to be held in the send and receive buffers). This came into play when I was setting up a global monitoring system for my client’s geographically diverse data centers.

Warning: Math

The Bandwidth Delay Product, $BDP$, is found by multiplying the theoretical maximum throughput of the link, $BW$, by the Round Trip Time, $RTT$.

$$BW \times RTT = BDP$$

For example, a 100 Mbps link (12.5 MB/s) with an 80 ms round trip time:

$$\frac{12.5\ \text{MB}}{\text{s}} \times 0.08\ \text{s} = 1\ \text{MB}$$

This indicates that the TCP buffers on either side of the link must be able to hold about 1 MB (roughly 0.95 MiB) of data to keep the link full. There are many, many places in modern code where the default TCP buffer size is set to 64 KiB.
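The arithmetic above is easy to script. This is only a sketch: the function name `bdp_bytes` is my own, and it assumes decimal units on the wire (1 Mbps = 10^6 bits per second).

```python
def bdp_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bandwidth Delay Product in bytes, for a link speed given in bits per second."""
    return bandwidth_bps / 8 * rtt_seconds


# The 100 Mbps, 80 ms example from the text.
bdp = bdp_bytes(100e6, 0.080)
print(f"{bdp:.0f} bytes = {bdp / 1e6:.2f} MB = {bdp / 2**20:.2f} MiB")
# prints: 1000000 bytes = 1.00 MB = 0.95 MiB
```

Plugging in your own link speed and measured round trip time gives the buffer size to aim for.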

$$\frac{64\ \text{KiB}}{0.08\ \text{s}} = 800\ \text{KiB/s} \approx 6.55\ \text{Mbps}$$

Ouch. That 64 KiB buffer size really hurts the throughput on the 100 Mbps link. In fact, 64 KiB is really painful for trans-continental links.
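To see how quickly a fixed 64 KiB window falls behind as latency grows, the same calculation can be run over a few round trip times. Again a rough sketch: `max_throughput_bps` is a name I made up, the list of latencies is arbitrary, and the result is an upper bound for a single connection in decimal megabits per second.

```python
def max_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput (bits per second) for one TCP connection
    that can keep at most window_bytes in flight per round trip."""
    return window_bytes * 8 / rtt_seconds


WINDOW = 64 * 1024  # the common 64 KiB default discussed above

for rtt_ms in (1, 10, 80, 150, 300):
    mbps = max_throughput_bps(WINDOW, rtt_ms / 1000) / 1e6
    print(f"{rtt_ms:>4} ms RTT -> {mbps:8.2f} Mbps")
```

The throughput ceiling is inversely proportional to the round trip time, which is exactly the curve in the graph.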

In Pictures

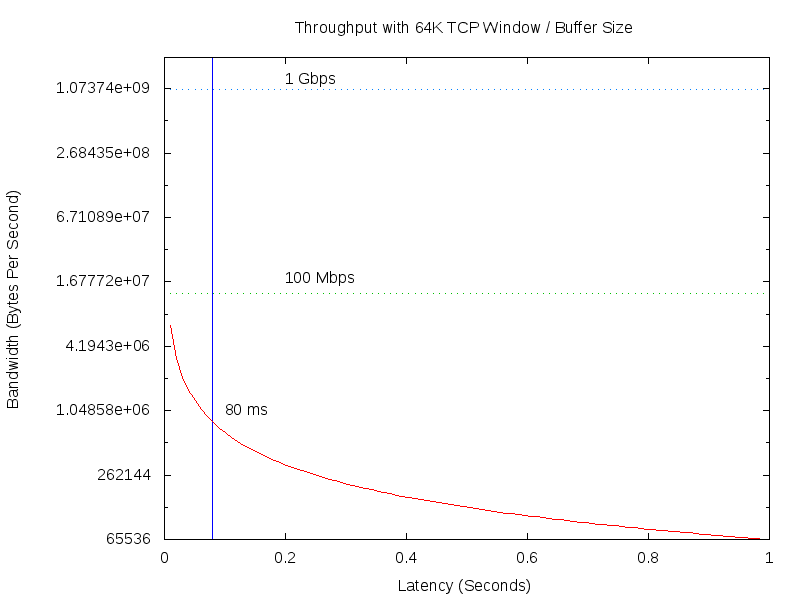

The graph above plots bandwidth against latency for a 64 KiB buffer, with bandwidth on a logarithmic scale. The curve is the available bandwidth, and the blue vertical line marks the 80 ms point.

Solutions

Check your socket code. There may be assumptions, limitations, or a configuration option in the code.
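As an illustration of what to look for in socket code, here is a small Python sketch that requests larger buffers and reads back what the kernel actually granted. The helper name `request_buffers` and the 1,000,000 byte target are mine, not taken from any particular tool. On Linux, the kernel doubles the value passed to `SO_RCVBUF` / `SO_SNDBUF` to leave room for bookkeeping and caps it at `net.core.rmem_max` / `net.core.wmem_max`, so the number read back will usually differ from the request. Setting these options explicitly also turns off the kernel's buffer autotuning for that socket, so it is not always a win.

```python
import socket


def request_buffers(sock: socket.socket, size: int) -> tuple:
    """Ask for send/receive buffers of `size` bytes and return what was granted."""
    # Set the options before connect() so the TCP window scale can be
    # negotiated with the larger receive buffer in mind.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, size)
    granted_rcv = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    granted_snd = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
    return granted_rcv, granted_snd


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
    before = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    rcv, snd = request_buffers(sock, 1_000_000)  # illustrative target, roughly the BDP above
    print(f"receive buffer: {before} -> {rcv} bytes, send buffer: {snd} bytes")
```

If the granted size is well below the request, the system-wide maximums are the next place to look.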

On the Linux kernel side, most modern distributions come fairly well tuned for TCP performance. You may need to tune the default and maximum memory that can be allocated to a socket:

net.core.rmem_default

net.core.rmem_max

net.core.wmem_default

net.core.wmem_max

There are also the TCP minimum, default, and maximum buffer sizes. Despite the ipv4 in their names, these settings also apply to TCP over IPv6.

net.ipv4.tcp_rmem

net.ipv4.tcp_wmem
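A quick way to compare the current TCP limits with a target BDP is to read them from /proc/sys. The sketch below is Linux only, the helper names `parse_tcp_mem` and `read_sysctl` are my own, and the 1,000,000 byte target is just the example figure from earlier. Each of the two TCP settings holds three numbers: minimum, default, and maximum, in bytes.

```python
from pathlib import Path


def parse_tcp_mem(text: str) -> dict:
    """Split a tcp_rmem / tcp_wmem value into min, default, and max (bytes)."""
    minimum, default, maximum = (int(field) for field in text.split())
    return {"min": minimum, "default": default, "max": maximum}


def read_sysctl(name: str) -> str:
    """Read a sysctl value through /proc/sys, e.g. 'net.ipv4.tcp_rmem'."""
    return Path("/proc/sys", *name.split(".")).read_text().strip()


TARGET_BDP = 1_000_000  # bytes; illustrative, substitute your own link's BDP

# Only attempt the read where /proc/sys exists (i.e. on Linux).
if Path("/proc/sys/net/ipv4").is_dir():
    for name in ("net.ipv4.tcp_rmem", "net.ipv4.tcp_wmem"):
        limits = parse_tcp_mem(read_sysctl(name))
        verdict = "ok" if limits["max"] >= TARGET_BDP else "smaller than the BDP"
        print(f"{name}: max {limits['max']} bytes ({verdict})")
```

The maximum is the value that matters most here, since the kernel's autotuning can grow a socket's buffer up to it.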

In Conclusion

I enjoy technical solutions to problems. If there’s a little math involved and a trick or two, I tend to follow that path for a solution. But there is one thing that will affect your TCP throughput even more than the Bandwidth Delay Product. Be absolutely certain that the other side of the connection is reading fast enough. TCP is designed to put back pressure on the writer if the reader isn’t fast enough or purposely slows down.
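The back pressure is easy to observe on a loopback connection. In this sketch (the function names are mine, and the byte counts will vary from system to system) the writer sends on a non-blocking socket while the reader does nothing, until the kernel refuses to accept more data. Once the reader drains what was queued, the writer becomes writable again.

```python
import select
import socket


def fill_until_blocked(writer: socket.socket, chunk: bytes) -> int:
    """Send on a non-blocking socket until the kernel refuses more data."""
    sent = 0
    while True:
        try:
            sent += writer.send(chunk)
        except BlockingIOError:
            return sent  # buffers are full: this is the back pressure


def backpressure_demo() -> tuple:
    """Return (bytes queued before stalling, bytes drained, writable again?)."""
    listener = socket.create_server(("127.0.0.1", 0))  # port 0: any free port
    writer = socket.create_connection(listener.getsockname())
    reader, _ = listener.accept()
    try:
        writer.setblocking(False)
        reader.settimeout(5.0)  # fail loudly instead of hanging forever

        # The reader is idle, so the writer eventually stalls.
        stalled_at = fill_until_blocked(writer, b"x" * 65536)

        # Now the reader catches up on everything that was queued.
        drained = 0
        while drained < stalled_at:
            drained += len(reader.recv(65536))

        # With the receive buffer empty, the writer may send again.
        _, writable, _ = select.select([], [writer], [], 5.0)
        return stalled_at, drained, bool(writable)
    finally:
        for sock in (reader, writer, listener):
            sock.close()


if __name__ == "__main__":
    stalled_at, drained, writable_again = backpressure_demo()
    print(f"writer stalled after {stalled_at} bytes; "
          f"reader drained {drained}; writable again: {writable_again}")
```

No amount of buffer tuning helps a writer whose peer is not reading; bigger buffers only delay the point at which it stalls.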

Armed with this knowledge and a few tuning options, I chased a problem for too long. In reality, there was some network congestion on a trans-continental link (no surprise there) and the tool I was using had a bug. It would block on TCP writes in its event loop, which caused it to slow down on reads, which meant the daemon on the other side would block on TCP writes.

Now, if only finding that bug were as simple.

Notes

- I use an OSS project called Merlin to build scalable, highly available, and redundant Nagios / Naemon solutions. New versions appear to have a bug: https://github.com/op5/merlin/pull/4

- More information about BDP: https://www.switch.ch/network/tools/tcp_throughput/

- GNUPlot incantations:

f(x) = (64*1024)/x

set ylabel "Bandwidth (Bytes Per Second)"

set xlabel "Latency (Seconds)"

set title "Throughput with 64K TCP Window / Buffer Size"

set logscale y 2

set key off

set label "100 Mbps" at .2,18107200

set label "1 Gbps" at .2,1.7e8

set label "80 ms" at 0.1,1.04858e6

set arrow from 0.08,65536 to 0.08,2.097152e9 nohead lc rgb 'blue'

set terminal png size 800,600

set output 'output.png'

plot [0:1] [65536:2.097152e9] f(x), 12500000 with dots, 125000000 with dots  # 100 Mbps and 1 Gbps in bytes per second